For the last three years Riemann (and its predecessors) has been a side project: I sketched designs, wrote code, tested features, and supported the community through nights and weekends. I was lucky to have supportive employers who allowed me to write new features for Riemann as we needed them. And yet, I’ve fallen behind.

Dozens of people have asked for sensible, achievable Riemann improvements that would help them monitor their systems, and I have a long list of my own. In the next year or two I’d like to build:

- Protocol enhancements: high-resolution times, groups, pubsub, UDP drop-rate estimation

- An expanded websockets dashboard

- Index state that persists through restarts

- Expanded documentation

- Configuration reloading

- SQL-backed indexes for faster querying and synchronizing state between multiple Riemann servers

- High-availability Riemann clusters using Zookeeper

- Some kind of historical data store, and a query interface for it

- An order-of-magnitude improvement in throughput

Write contention occurs when two people try to update the same piece of data at the same time.

We know several ways to handle write contention, and they fall along a spectrum. For strong consistency (or what CAP might term “CP”) you can use explicit locking, perhaps provided by a central server, or optimistic concurrency, where writes proceed through independent transactions but can fail on conflicting commits. These approaches need not be centralized: consensus protocols like Paxos or two-phase commit allow a cluster of machines to agree on an isolated transaction, with either pessimistic or optimistic locking, even in the face of some failures and partitions.
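To make the optimistic end of that spectrum concrete, here is a minimal single-process sketch in Python. Everything in it is an assumption made for illustration: `VersionedCell`, `commit`, and `update` are hypothetical names, and a real system would run the version check inside a database or a consensus round rather than under an in-memory lock.

```python
import threading


class VersionedCell:
    """One value guarded by a version counter (hypothetical, for illustration)."""

    def __init__(self, value):
        self._lock = threading.Lock()  # protects only the brief commit step
        self._value = value
        self._version = 0

    def read(self):
        """Return a (value, version) snapshot."""
        with self._lock:
            return self._value, self._version

    def commit(self, new_value, expected_version):
        """Install new_value only if nobody has committed since expected_version."""
        with self._lock:
            if self._version != expected_version:
                return False  # conflicting commit: the caller must retry
            self._value = new_value
            self._version += 1
            return True


def update(cell, fn, max_retries=100):
    """Optimistically apply fn to the cell, retrying when a commit conflicts."""
    for _ in range(max_retries):
        value, version = cell.read()
        if cell.commit(fn(value), version):
            return True
    return False
```

If two writers read the same version, the first `commit` succeeds and the second returns `False`; the loser re-reads and tries again, which is all `update` does. A pessimistic design would instead hold the lock from the read until the write.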

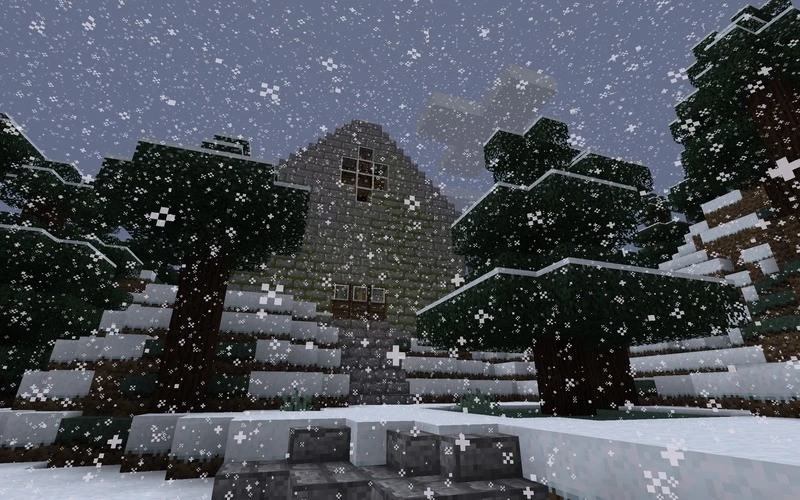

This is the house I grew up in. It was particularly challenging to render in one-meter increments, especially while preserving the positions of walls and staircases. The entire structure wanted to shift around in various dimensions; a tension which is only partly resolved by resizing the stair and kitchen.

I chose a site on a hill, facing west; echoing the original lot.

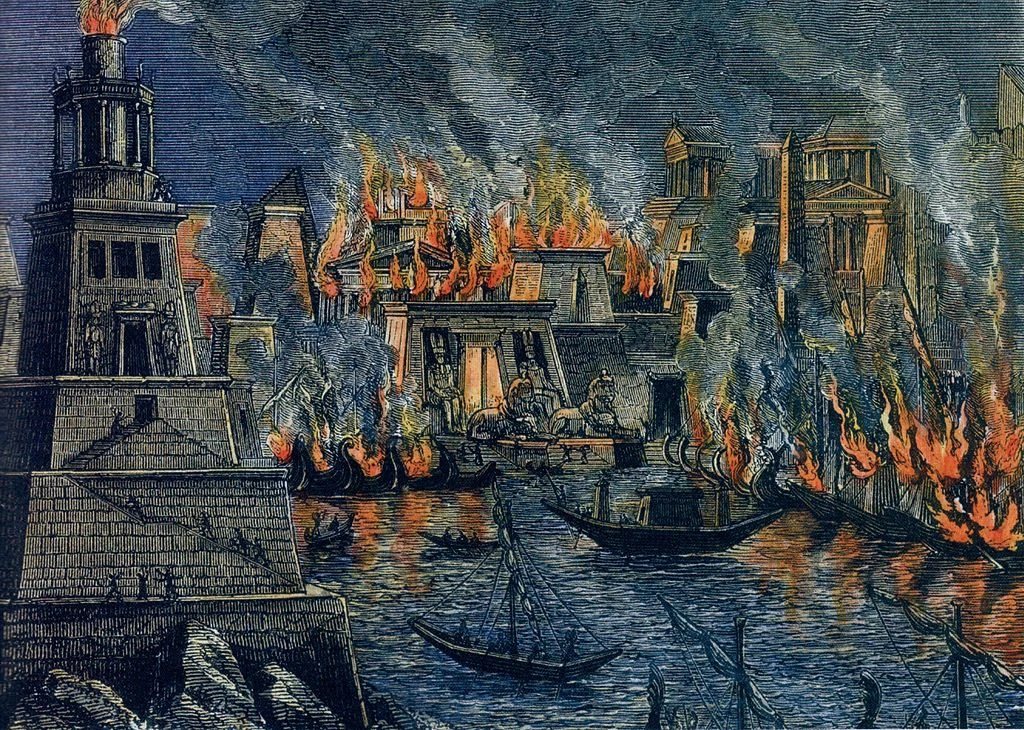

Violating every principle of book conservation, CELL is a library designed to evoke the impermanence and chaos of cellular biology.

CELL is situated in the middle of a marsh (intercellular matrix); readers arrive by boat to any of four landings (alpha-hemolysin complexes).

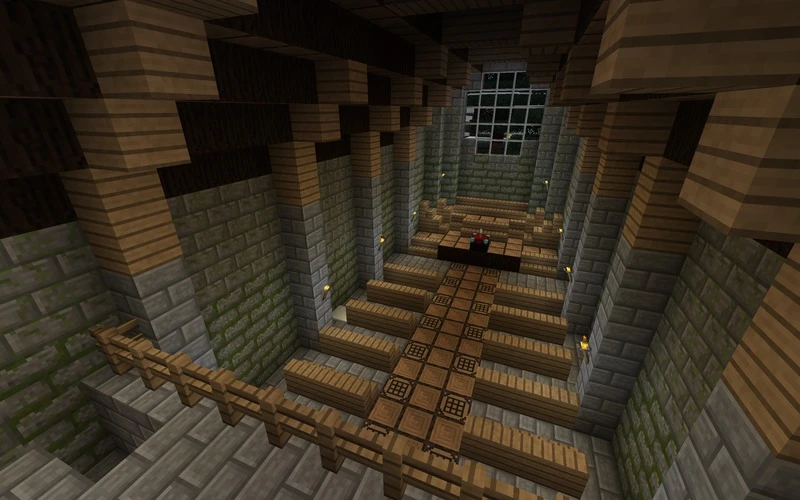

Typically my taste in architecture is functional, spartan, and modern–but there’s a great deal to be said for “A Pattern Language”. As I approach the end of the book, I’ve started to question, apply, and practice these patterns in Minecraft structures. This structure is a cabin for a small family or group of friends.

Site

You guys, we have to talk about Saltillo.

This dude is nuts. He emits high-octane nightmare fuel as a byproduct from an inexplicable process of self-discovery the likes of which I’ve never seen. His art is disturbing as fuck. But the music–oh man, this is good stuff.

This is total conjecture; please correct me in the comments, because I don’t understand finance at all. This is from a physics standpoint.

In markets, money flows against an information gradient. Traders with perfect knowledge of a stock’s value in the future can make trades with no risk, yielding the highest expectation values of returns E[R]. Traders with zero knowledge of the stock’s value have the worst expectation value. If the market is conservative (that is to say, no money is added or lost inside the market itself: a stock sells for x dollars and is purchased for x dollars in each transaction), the sum of all expectation values over traders

$$S = \sum_{i=0}^{n} E[R(\mathrm{trader}_i)]$$

In response to Results of the 2012 State of Clojure Survey:

The idea of having a primary language honestly comes off to me as a sign that the developer hasn't spent much time programming yet: the real world has so many languages in it, and many times the practical choice is constrained by that of the platform or existing code to interoperate with.

I’ve been writing code for ~18 years, ~10 professionally. I’ve programmed in (chronological order here) Modula-2, C, Basic, the HTML constellation, Perl, XSLT, Ruby, PHP, Java, Mathematica, Prolog, C++, Python, ML, Erlang, Haskell, Clojure, and Scala. I can state unambiguously that Clojure is my primary language: it is the most powerful, the most fun, and has the fewest tradeoffs.

More from Hacker News. I figure this might be of interest to folks working on parallel systems. I’ll let KirinDave kick us off with:

Go scales quite well across multiple cores iff you decompose the problem in a way that's amenable to Go's strategy. Same with Erlang. No one is making “excuses”. It’s important to understand these problems. Not understanding concurrency, parallelism, their relationship, and Amdahl’s Law is what has Node.js in such trouble right now.

Our last full day in New Zealand, I rented a Ninjette from the folks over at nzbike.com. $70 for a full day, maybe $90 with petrol included. I headed north from Auckland on 16, found mile after mile of well-paved, well-paced roads with good cadence and spectacular scenery. Enjoyed getting completely lost in a generally northeasterly direction, hit the eastern coast, took in the surf, and hugged the coast south. It’s all farms: one-lane bridges over creeks, limestone hillocks, and the occasional jaw-dropping valley opened up in the sun. Hit some dirt, a few dead ends, and generally had a grand time of it.

Everyone, everywhere, was thrilled to say hello, tell me about their farm or flower shop, and give directions. I don’t think I met a single unfriendly soul in the whole darn country. Even petrol station attendants grinned, warned me about storms to the north, and pointed out a better route. Maybe it’s a factor of size, maybe it’s purely cultural, but I wish the rural US were anywhere near this friendly.

Sunset lasted three hours. I don’t know how to describe it, and it doesn’t come through in photos. Been trying for the last eight hours to find an exposure that fits, but nothing feels right. The light is warm, hangs in the air thickly, coats the landscape in dusty gold.

This is a response to a Hacker News thread asking about concurrency vs parallelism.

Concurrency is more than decomposition, and more subtle than “different pieces running simultaneously.” It’s actually about causality.

Two operations are concurrent if they have no causal dependency between them.
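One common way to make "no causal dependency" checkable is a vector clock, which is my choice of illustration here and not something the thread prescribes. In this sketch each operation carries a dict mapping node names to counters; the node names and the `compare` function are hypothetical.

```python
def compare(a, b):
    """Classify two vector clocks as 'before', 'after', 'equal', or 'concurrent'.

    Each clock is a dict of node name -> counter; a missing node counts as 0.
    """
    nodes = set(a) | set(b)
    a_ahead = any(a.get(n, 0) > b.get(n, 0) for n in nodes)
    b_ahead = any(b.get(n, 0) > a.get(n, 0) for n in nodes)
    if a_ahead and b_ahead:
        return "concurrent"  # each has seen something the other has not
    if b_ahead:
        return "before"      # a causally precedes b
    if a_ahead:
        return "after"       # b causally precedes a
    return "equal"


# Two writes on different nodes, neither aware of the other:
w1 = {"n1": 1}
w2 = {"n2": 1}
# A later write on n1 that has already observed w2:
w3 = {"n1": 2, "n2": 1}

print(compare(w1, w2))
print(compare(w1, w3))
```

Here `w1` and `w2` come out as concurrent because neither clock dominates the other, while `w1` is ordered before `w3`. Wall-clock time never enters into it: only what each operation could have known about the other.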