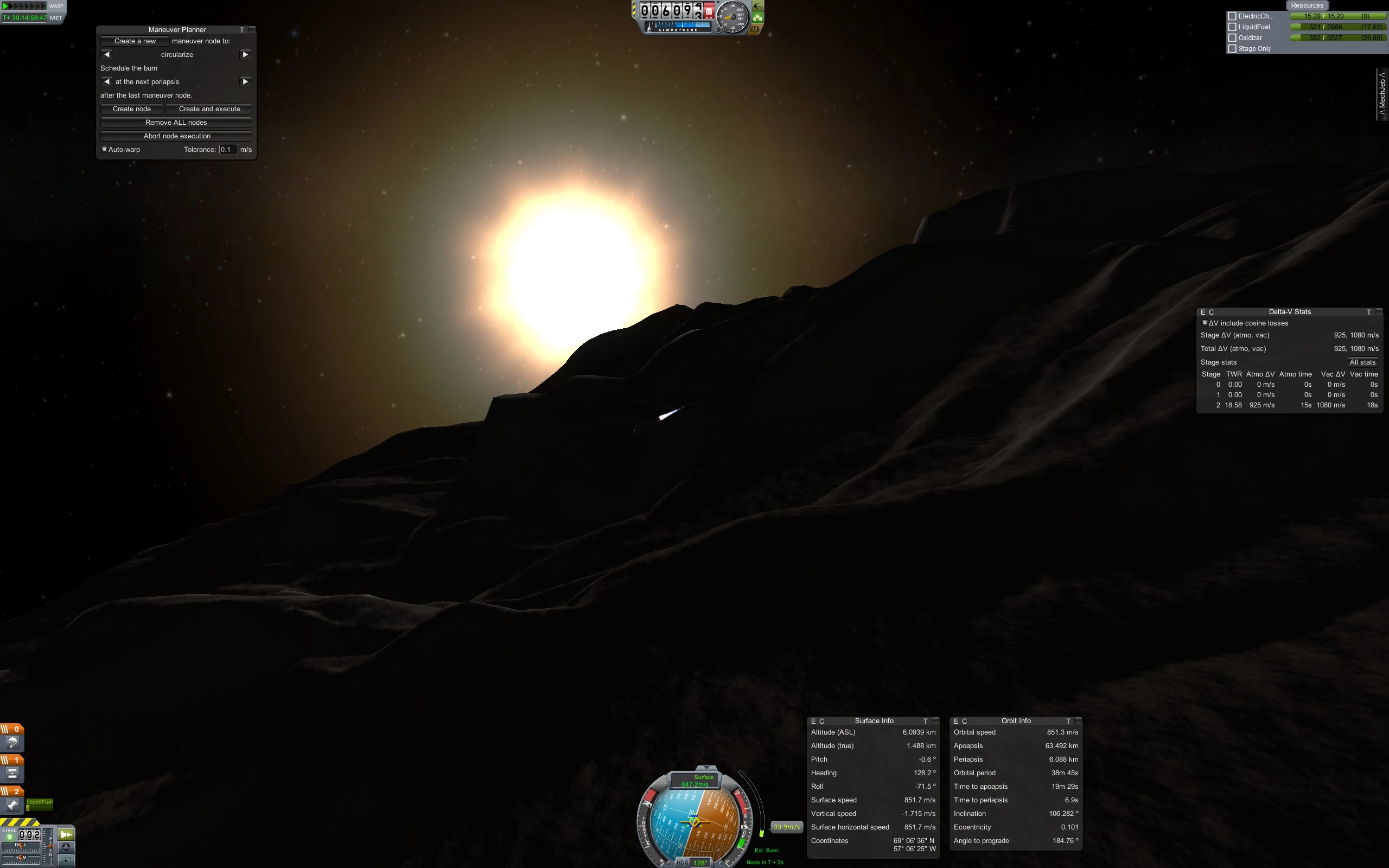

Kerbal Space Program is a game that, at its best, conveys the grandeur and majesty of space exploration. I suggest you put on an appropriate soundtrack and imagine the long, slow descent to the surface of the moon, in a craft surrounded by millions of miles of vacuum. Having traveled for days, shedding propellant and stages along your journey, until finally, your final stage hovers, whispering to a halt on jets of burning breath from your ancestral homeland–

one wheel touches–

Somewhere around January of 2014, I discovered Kerbal Space Program.

This was my first successful craft, which lifted off, flew a short distance from the pad, then (after several botched attempts and the deaths of several Kerbal pilots) touched down.

Prey is a perfectly serviceable AAA-class, science-fiction survival thriller game. It’s a first-person shooter, a stealth adventure, and a surprisingly enjoyable platformer. The plot is all right. The art is iconoclastic and gorgeous. As is traditional for the genre, much of the storytelling transpires through the environment: emails, voice logs, and diorama. There are some lovely ethical questions, both abstract and reified. For some reason, these are the things that people talk about when they talk about Prey.

After all, Prey is a videogame, and gameplay, art direction, and story are how we read videogames as texts. But I’d like to step back for a moment and talk about Prey’s symbolic and thematic choices, which are absolutely fucking fascinating. Spoilers ahead.

Penetration and Containment

Previously: Hexing the technical interview.

In the formless days, long before the rise of the Church, all spells were woven of pure causality, all actions were permitted, and death was common. Many witches were disfigured by their magicks, found crumpled at the center of a circle of twisted, glass-eaten trees, and stones which burned unceasing in the pooling water; some disappeared entirely, or wandered along the ridgetops: feet never touching earth, breath never warming air.

As a child, you learned the story of Gullveig-then-Heiðr, reborn three times from the fires of her trial, who traveled the world performing seidr: the reading and re-weaving of the future. Her foretellings (and there were many) were famed—spoken of even by the völva-beyond-the-world—but it was her survival that changed history. Through the ecstatic trance of seidr, she foresaw her fate, and conquered death. Her spells would never crash, and she became a friend to outcast women: the predecessors of your kind. It is said that Odin himself learned immortality from her.

Previously: Reversing the technical interview.

Long ago, on Svalbard, when you were a young witch of forty-three, your mother took your unscarred wrists in her hands, and spoke:

Vidrun, born of the sea-wind through the spruce

Vidrun, green-tinged offshoot of my bough, joy and burden of my life

Vidrun, fierce and clever, may our clan’s wisdom be yours:

Never read Hacker News

If you want to get a job as a software witch, you’re going to have to pass a whiteboard interview. We all do them, as engineers–often as a part of our morning ritual, along with arranging a beautiful grid of xterms across the astral plane, and compulsively running ls in every nearby directory–just in case things have shifted during the night–the incorporeal equivalent of rummaging through that drawer in the back of the kitchen where we stash odd flanges, screwdrivers, and the strangely specific plastic bits: the accessories, those long-estranged black sheep of the families of our household appliances, their original purpose now forgotten, perhaps never known, but which we are bound to care for nonetheless. I’d like to walk you through a common interview question: reversing a linked list.

First, we need a linked list. Clear your workspace of unwanted xterms, sprinkle salt into the protective form of two parentheses, and recurse. Summon a list from the void.

```clojure
(defn cons [h t] #(if % h t))
```

Last fall, I worked with CockroachDB to review and extend their Jepsen test suite. We found new bugs leading to serializability violations, improved documentation, and demonstrated documented behavior around nonlinearizable multi-key transactions. You can read the full analysis on jepsen.io.

This fall, I worked with MongoDB to design a new Jepsen test for MongoDB. We discovered design flaws in the v0 replication protocol, plus implementation bugs in the v1 protocol, both of which allowed for the loss of majority-committed updates. While the v0 protocol remains broken, patches for v1 are available in MongoDB 3.2.12 and 3.4.0, and now pass the expanded Jepsen test suite.

You can read the full analysis at jepsen.io.

In Herlihy and Wing’s seminal paper introducing linearizability, they mention an important advantage of this consistency model:

Unlike alternative correctness conditions such as sequential consistency [31] or serializability [40], linearizability is a local property: a system is linearizable if each individual object is linearizable.

Locality is important because it allows concurrent systems to be designed and constructed in a modular fashion; linearizable objects can be implemented, verified, and executed independently. A concurrent system based on a nonlocal correctness property must either rely on a centralized scheduler for all objects, or else satisfy additional constraints placed on objects to ensure that they follow compatible scheduling protocols.

This advantage is not shared by sequential consistency, or its multi-object cousin, serializability. This much, I knew–but Herlihy & Wing go on to mention, almost offhand, that strict serializability is also nonlocal!
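To make the nonlocality of sequential consistency concrete, it can help to brute-force a tiny example. The sketch below is an illustration under stated assumptions, not something taken from the paper's text: the history `HISTORY`, the two FIFO queues `p` and `q`, and the helper names `interleavings`, `legal`, and `sequentially_consistent` are all invented for the example. It treats a history as sequentially consistent if some total order preserves each process's program order and replays legally against FIFO queues.

```python
from collections import deque

# Hypothetical history: two processes, A and B, sharing two FIFO queues, p and q.
# Each operation is (object, kind, value); for "deq", value is the result returned.
HISTORY = {
    "A": [("p", "enq", "x"), ("q", "enq", "x"), ("p", "deq", "y")],
    "B": [("q", "enq", "y"), ("p", "enq", "y"), ("q", "deq", "x")],
}


def interleavings(seqs):
    """Yield every merge of the sequences that keeps each one's internal order."""
    if all(not s for s in seqs):
        yield []
        return
    for i, s in enumerate(seqs):
        if s:
            rest = seqs[:i] + [s[1:]] + seqs[i + 1:]
            for tail in interleavings(rest):
                yield [s[0]] + tail


def legal(order):
    """Replay a total order against FIFO queues; every dequeue must match."""
    queues = {}
    for obj, kind, value in order:
        q = queues.setdefault(obj, deque())
        if kind == "enq":
            q.append(value)
        elif not q or q.popleft() != value:
            return False
    return True


def sequentially_consistent(history, objects=None):
    """Search for a legal, program-order-preserving total order.

    If `objects` is given, only operations on those objects are considered.
    """
    seqs = [
        [op for op in ops if objects is None or op[0] in objects]
        for ops in history.values()
    ]
    return any(legal(order) for order in interleavings(seqs))


per_object = {obj: sequentially_consistent(HISTORY, {obj}) for obj in ("p", "q")}
combined = sequentially_consistent(HISTORY)
print(per_object, combined)  # {'p': True, 'q': True} False
```

Restricted to `p` alone or `q` alone, a legal order exists. No single order works for both queues at once: `p` needs B's enqueue before A's, `q` needs A's enqueue before B's, and program order ties those two requirements into a cycle. Locality is the guarantee that this situation cannot arise for linearizability: if each object's history is linearizable, so is the whole.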

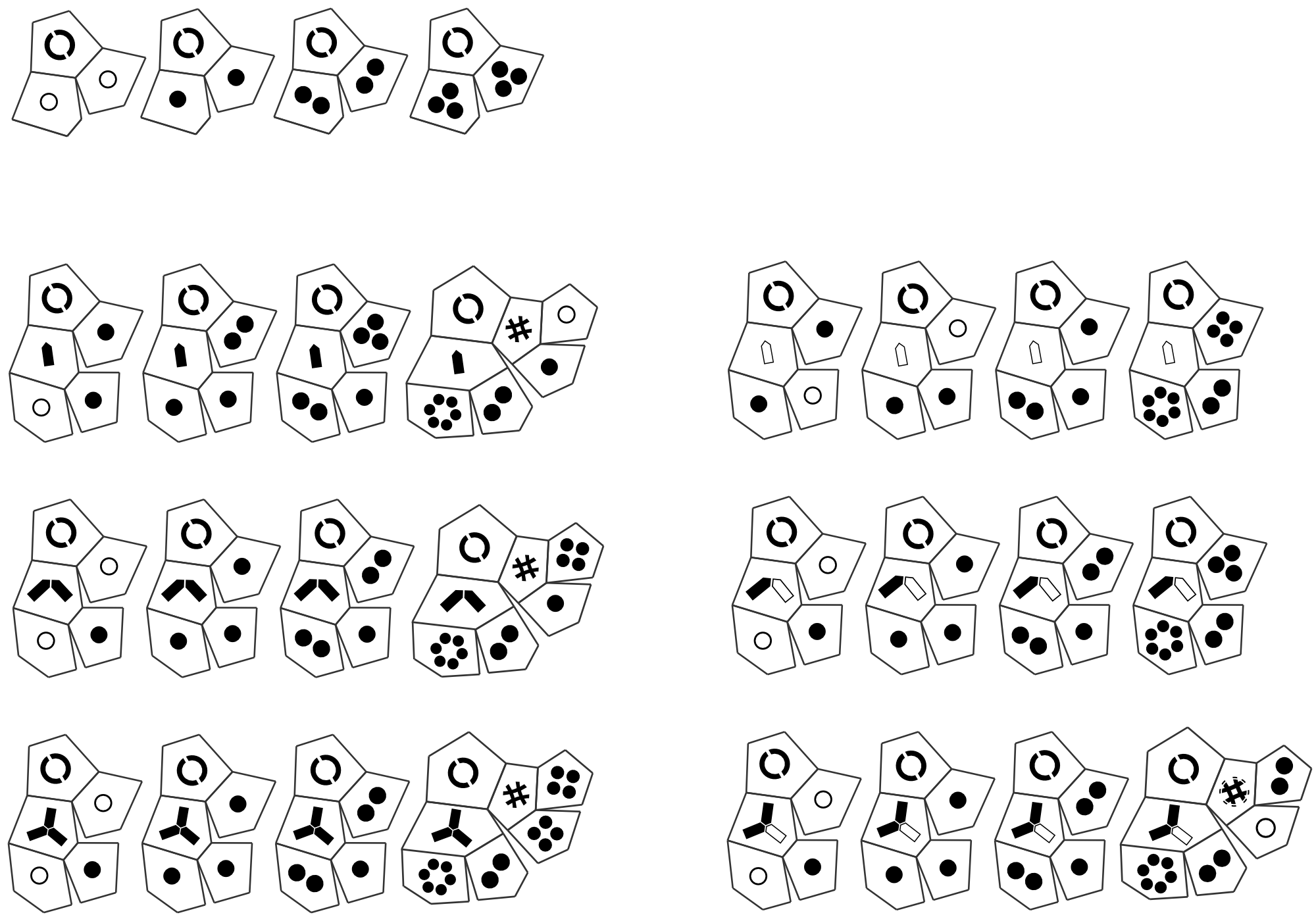

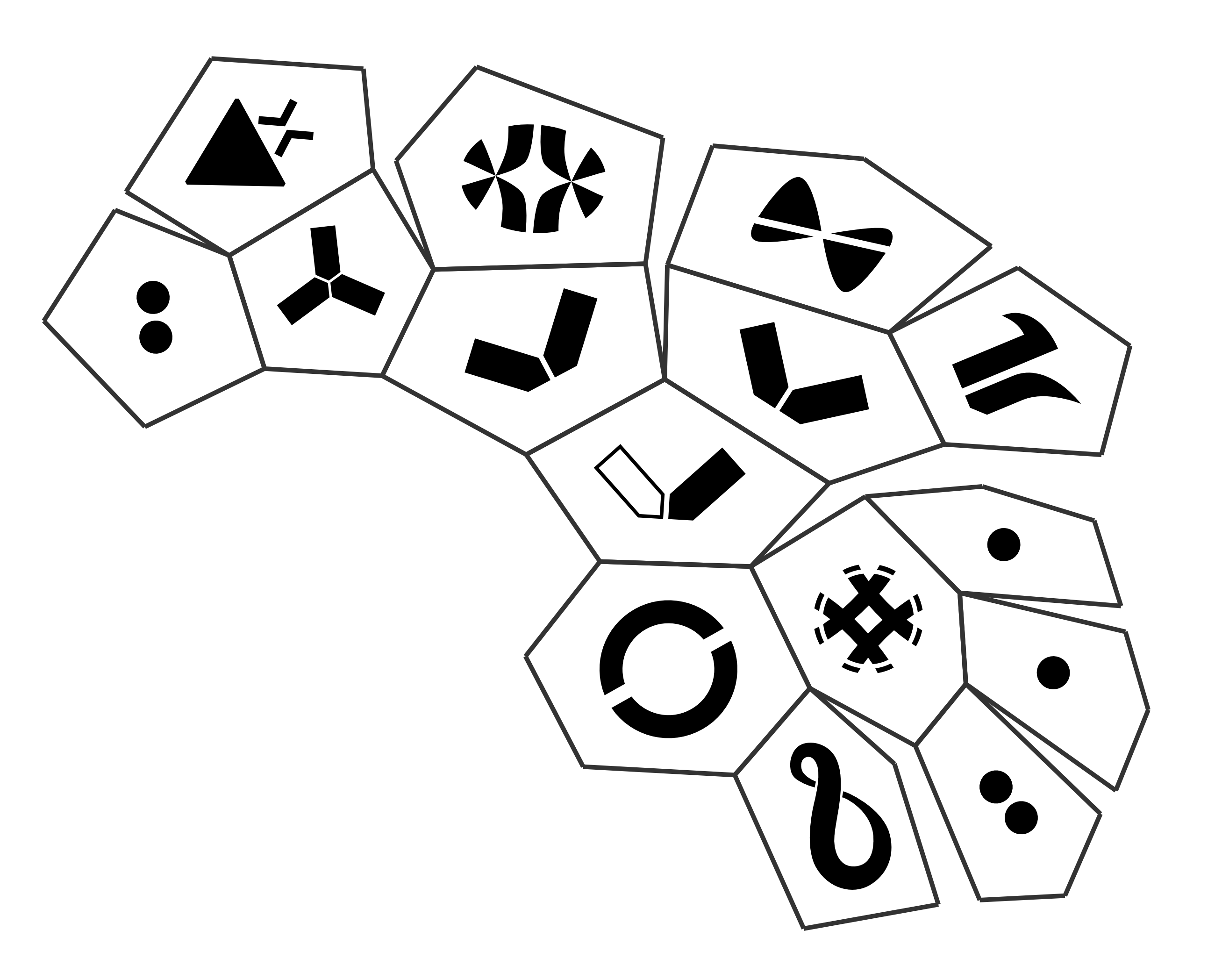

I finished my tattoo last night. If you like puzzles, here’s a primer for the language, and the design itself. You’ll need some basic algebra for the primer, and a little domain knowledge–or a few Google queries–for the tattoo proper.

These are unpolished thoughts. I started playing again for sources and to refine these ideas, but the game crashes so often that I’m giving up. Still think some folks might find this interesting. Spoilers everywhere.

In the opening, Davey notes that the Counter-Strike level appears to be a desert town, but Coda has scattered these floating boxes and out-of-place, brightly-colored cubes in the level: a reminder that the game is not exactly what it purports to be. “Calling cards”, he calls them. A reminder that the game was created by a real person. “They are all going to give us access to their creator. I want to see past the games themselves. I want to know who the real person is.”

Jump up a level. We’re not just interested in learning about Coda as a person. We’re interested in understanding Davey as a person, too. What were the rough circumstances in Davey’s life? How did Coda’s work help? If each level tells us something about Coda, the purported author, then The Beginner’s Guide tells us something about Davey. And in another sense, the characters of Davey and Coda tell us something about the real Davey, and the people on his team. For instance, regardless of how we interpret the narrative’s characters, it’s likely safe to say that the real Davey is interested in questions of authorial intent.