Op Color Plots

A lot of my work involves staring at visualizations trying to get an intuitive feeling for what a system is doing. I’ve been working on a new visualization for Jepsen, a distributed systems testing library. This is something I’ve had in the back of my head for years but never quite got around to.

A Jepsen test records a history of operations. Those operations often come in a few different flavors. For instance, if we’re testing a queue, we might send messages into the queue, and try to read them back at the end. It would be bad if some messages didn’t come back; that could mean data loss. It would also be bad if messages came out that were never enqueued; that could signify data corruption. A Jepsen checker for a queue might build up some data structures with statistics and examples of these different flavors: which records were lost, unexpected, and so on. Here’s an example from the NATS test I’ve been working on this month:

{:valid? false,
 :attempt-count 529583,
 :acknowledged-count 529369,
 :read-count 242123,
 :ok-count 242123,
 :recovered-count 3,
 :hole-count 159427,
 :lost-count 287249,
 :unexpected-count 0,
 :lost #{"110-6014" ... "86-8234"},
 :holes #{"110-4072" ... "86-8234"},
 :unexpected #{}}
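The flavors in a result like this come down to set arithmetic over message IDs. Here is a rough sketch in Python, not Jepsen's actual checker: the function name and the three input sets are hypothetical, and the definitions are assumptions (lost means acknowledged but never read, unexpected means read but never attempted, recovered means read without ever being acknowledged). Holes are left out because their definition is not given here.

```python
def classify_queue_history(attempted, acknowledged, read):
    """Classify message IDs from a queue test using set arithmetic.

    Assumed definitions (illustrative only):
      ok         -- read back, and some client did try to enqueue it
      lost       -- acknowledged by the queue, but never read back
      unexpected -- read back, but never enqueued by any client
      recovered  -- read back even though the enqueue was never acknowledged
    """
    attempted, acknowledged, read = set(attempted), set(acknowledged), set(read)
    ok = read & attempted
    lost = acknowledged - read
    unexpected = read - attempted
    recovered = ok - acknowledged
    return {
        "valid?": not lost and not unexpected,
        "attempt-count": len(attempted),
        "acknowledged-count": len(acknowledged),
        "read-count": len(read),
        "ok-count": len(ok),
        "recovered-count": len(recovered),
        "lost-count": len(lost),
        "unexpected-count": len(unexpected),
        "lost": lost,
        "unexpected": unexpected,
    }


# Tiny made-up history: "1-3" was never acknowledged but showed up anyway,
# while "1-2" and "2-1" were acknowledged and then vanished.
result = classify_queue_history(
    attempted={"1-1", "1-2", "1-3", "2-1"},
    acknowledged={"1-1", "1-2", "2-1"},
    read={"1-1", "1-3"},
)
```

These assumed definitions agree with the counts above: with no unexpected messages every read is ok, so 242123 − 3 = 242120 messages were both acknowledged and read, and 529369 − 242120 = 287249 acknowledged messages never came back.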

Why is Jepsen Written in Clojure?

People keep asking why Jepsen is written in Clojure, so I figure it’s worth having a referenceable answer. I’ve programmed in something like twenty languages. Why choose a Weird Lisp?

Jepsen is built for testing concurrent systems–mostly databases. Because it tests concurrent systems, the language itself needs good support for concurrency. Clojure’s immutable, persistent data structures make it easier to write correct concurrent programs, and the language and runtime have excellent concurrency support: real threads, promises, futures, atoms, locks, queues, cyclic barriers, all of java.util.concurrent, etc. I also considered languages (like Haskell) with more rigorous control over side effects, but decided that Clojure’s less-dogmatic approach was preferable.

Because Jepsen tests databases, it needs broad client support. Almost every database has a JVM client, typically written in Java, and Clojure has decent Java interop.

10⁹ Operations: Large Histories with Jepsen

Jepsen is a library for writing tests of concurrent systems: everything from single-node data structures to distributed databases and queues. A key part of this process is recording a history of operations performed during the test. Jepsen checkers analyze a history to find consistency anomalies and to compute performance metrics. Traditionally Jepsen has stored the history in a Clojure vector (an immutable in-memory data structure like an array), and serialized it to disk at the end of the test. This limited Jepsen to histories on the order of tens of millions of operations. It also meant that if Jepsen crashed during a several-hour test run, it was impossible to recover any of the history for analysis. Finally, saving and loading large tests involved long wait times—sometimes upwards of ten minutes.

Over the last year I’ve been working on ways to resolve these problems. Generators are up to ten times faster. A new operation datatype makes each operation smaller and faster to access. Jepsen’s new on-disk format allows us to stream histories incrementally to disk, to work with histories of up to a billion operations far exceeding available memory, to recover safely from crashes, and to load tests almost instantly by deserializing data lazily. New history datatypes support both densely and sparsely indexed histories, and efficiently cache auxiliary indices. They also support lazy disk-backed map and filter. These histories support both linear and concurrent folds, which dramatically improves checker performance on multicore systems: real-world checkers can readily analyze 250,000 operations/sec. Histories support multi-query optimization: when multiple threads fold over the same history, a query planner automatically fuses those folds together to perform them in a single pass. Since Jepsen often folds dozens of times over the same history, this saves a good deal of disk IO and deserialization time. These features are enabled by a new, transactional, dependency-aware task executor.
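To make the idea of fusing folds concrete, here is a minimal sketch in Python. It is not Jepsen's query planner: the `Fold` class and the `fuse` and `run_fold` helpers are hypothetical names, and the sketch covers only linear (single-threaded) folds. The point is that several independent reductions can share one traversal of the history by carrying a list of accumulators.

```python
from dataclasses import dataclass
from typing import Any, Callable, Iterable, List


@dataclass
class Fold:
    """A fold: a way to make an initial accumulator, plus a reducing step."""
    init: Callable[[], Any]
    step: Callable[[Any, Any], Any]


def fuse(folds: List[Fold]) -> Fold:
    """Combine folds into one whose accumulator is a list of their accumulators."""
    return Fold(
        init=lambda: [f.init() for f in folds],
        step=lambda accs, op: [f.step(acc, op) for f, acc in zip(folds, accs)],
    )


def run_fold(fold: Fold, history: Iterable[Any]) -> Any:
    """Reduce over the history exactly once."""
    acc = fold.init()
    for op in history:
        acc = fold.step(acc, op)
    return acc


# Two unrelated questions about the same (made-up) history.
count_ok = Fold(lambda: 0, lambda n, op: n + (1 if op["type"] == "ok" else 0))
count_reads = Fold(lambda: 0, lambda n, op: n + (1 if op["f"] == "read" else 0))

# A one-shot iterator stands in for a history that is expensive to re-read.
history = iter([
    {"type": "ok", "f": "write"},
    {"type": "fail", "f": "read"},
    {"type": "ok", "f": "read"},
])

ok_count, read_count = run_fold(fuse([count_ok, count_reads]), history)
```

Because the history here can only be consumed once, running the two folds separately would leave the second with nothing to read; the fused fold answers both questions in a single pass.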

Jepsen: CockroachDB beta-20160829

Last fall, I worked with CockroachDB to review and extend their Jepsen test suite. We found new bugs leading to serializability violations, improved documentation, and demonstrated documented behavior around nonlinearizable multi-key transactions. You can read the full analysis on jepsen.io.

Jepsen: MongoDB 3.4.0-rc3

This fall, I worked with MongoDB to design a new Jepsen test for MongoDB. We discovered design flaws in the v0 replication protocol, plus implementation bugs in the v1 protocol, both of which allowed for the loss of majority-committed updates. While the v0 protocol remains broken, patches for v1 are available in MongoDB 3.2.12 and 3.4.0, and now pass the expanded Jepsen test suite.

You can read the full analysis at jepsen.io.

Jepsen: VoltDB 6.3

In the last Jepsen post, we found that RethinkDB could lose data when a network partition occurred during cluster reconfiguration. In this analysis, we’ll show that although VoltDB 6.3 claims strict serializability, internal optimizations and bugs lead to stale reads, dirty reads, and even lost updates; fixes are now available in version 6.4. This work was funded by VoltDB, and conducted in accordance with the Jepsen ethics policy.

VoltDB is a distributed SQL database intended for high-throughput transactional workloads on datasets which fit entirely in memory. All data is stored in RAM, but backed by periodic disk snapshots and an on-disk recovery log for crash durability. Data is replicated to at least k+1 nodes to tolerate k failures. Tables may be replicated to every node for fast local reads, or sharded for linear storage scalability.

As an SQL database, VoltDB supports the usual ad-hoc SQL statements, with some caveats (e.g. no auto-increment, no foreign key constraints, etc.). However, its approach to multi-statement transactions is distinct: instead of BEGIN ... COMMIT, VoltDB transactions are expressed as stored procedures, either in SQL or Java. Stored procedures must be deterministic across nodes (a constraint checked by hashing and comparing their resulting SQL statements), which allows VoltDB to pipeline transaction execution given a consensus on transaction order.
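The determinism check can be pictured as each replica hashing the SQL statements a procedure run produced, then comparing digests. The Python sketch below is only an illustration of that idea under stated assumptions: the helper names and replica names are hypothetical, and SHA-256 is a stand-in, since neither VoltDB's hash function nor exactly what it hashes is described here.

```python
import hashlib


def statement_digest(statements):
    """Hash the ordered SQL statements one procedure run produced."""
    h = hashlib.sha256()  # assumed hash; a stand-in for whatever the database uses
    for sql in statements:
        data = sql.encode("utf-8")
        # Length-prefix each statement so statement boundaries affect the digest.
        h.update(len(data).to_bytes(8, "big"))
        h.update(data)
    return h.hexdigest()


def replicas_agree(runs):
    """runs maps a (hypothetical) replica name to the statements it executed."""
    return len({statement_digest(stmts) for stmts in runs.values()}) <= 1


# Every replica derives the same statements from the same inputs.
deterministic = {
    "n1": ["UPDATE accounts SET balance = balance - 5 WHERE id = 1"],
    "n2": ["UPDATE accounts SET balance = balance - 5 WHERE id = 1"],
}

# One replica embedded a value from its own local clock.
nondeterministic = {
    "n1": ["INSERT INTO events VALUES (1, 1700000000)"],
    "n2": ["INSERT INTO events VALUES (1, 1700000001)"],
}

deterministic_ok = replicas_agree(deterministic)
nondeterministic_ok = replicas_agree(nondeterministic)
```

A mismatch between digests means two replicas would have applied different writes for the same transaction, which is exactly what a deterministic procedure must never do.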

Jepsen: Crate 0.54.9 version divergence

In the last Jepsen analysis, we saw that RethinkDB 2.2.3 could encounter spectacular failure modes due to cluster reconfiguration during a partition. In this analysis, we’ll talk about Crate, and find out just how many versions a row’s version identifies.

Crate is a shared-nothing, “infinitely scalable”, eventually-consistent SQL database built on Elasticsearch.

Because Elasticsearch has lost, and continues to lose and corrupt, data in response to network partitions and other faults, some might question whether Elasticsearch is appropriate for a primary data store. Crate’s co-founders knew about these hazards, and promised to publish fault-tolerance documentation in October 2014.

Jepsen: RethinkDB 2.2.3 reconfiguration

In the previous Jepsen analysis of RethinkDB, we tested single-document reads, writes, and conditional writes, under network partitions and process pauses. RethinkDB did not exhibit any nonlinearizable histories in those tests. However, testing with more aggressive failure modes, on both 2.1.5 and 2.2.3, has uncovered a subtle error in Rethink’s cluster membership system. This error can lead to stale reads, dirty reads, lost updates, node crashes, and table unavailability requiring an unsafe emergency repair. Versions 2.2.4 and 2.1.6, released last week, address this issue.

Until now, Jepsen tests have used a stable cluster membership throughout the test. We typically run the system being tested on five nodes, and although the network topology between the nodes may change, processes may crash and restart, and the system may elect new nodes as leaders, we do not introduce or remove nodes from the system while it is running. Thus far, we haven’t had to go that far to uncover concurrency errors.

Since RethinkDB passed its stable-membership partitioning tests, I offered the team a more aggressive failure model: we’d dynamically reconfigure the cluster membership during the test. This is a harder problem than consensus with fixed membership: both old and new nodes must gracefully agree on the membership change, ensure that both sets of nodes will agree on any operations performed during the handover, and finally transition to normal consensus on the new set of nodes. The delicate handoff of operations from old nodes to new provides ample opportunities for mistakes.

Jepsen: RethinkDB 2.1.5

In this Jepsen report, we’ll verify RethinkDB’s support for linearizable operations using majority reads and writes, and explore assorted read and write anomalies when consistency levels are relaxed. This work was funded by RethinkDB, and conducted in accordance with the Jepsen ethics policy.

RethinkDB is an open-source, horizontally scalable document store. Similar to MongoDB, documents are hierarchical, dynamically typed, schemaless objects. Each document is uniquely identified by an id key within a table, which in turn is scoped to a DB. On top of this key-value structure, a composable query language allows users to operate on data within documents, or across multiple documents–performing joins, aggregations, etc. However, only operations on a single document are atomic–queries which access multiple keys may read and write inconsistent data.

RethinkDB shards data across nodes by primary key, maintaining replicas of each key across n nodes for redundancy. For each shard, a single replica is designated a primary, which serializes all updates (and strong reads) to that shard’s documents–allowing linearizable writes, updates, and reads against a single key.

Jepsen: Percona XtraDB Cluster

Percona’s CTO Vadim Tkachenko wrote a response to my Galera Snapshot Isolation post last week. I think Tkachenko may have misunderstood some of my results, and I’d like to clear those up now. I’ve ported the MariaDB tests to Percona XtraDB Cluster, and would like to confirm that using exclusive write locks on all reads, as Tkachenko recommends, can recover serializable histories. Finally, we’ll address Percona’s documentation.

I didn’t use the default isolation levels

But there I need to add quite IMPORTANT addition: it may leave data in inconsistent state if you use SPECIAL TYPE of transactions in default isolation levels that Aphyr uses in his test.

Jepsen: MariaDB Galera Cluster

Previously, on Jepsen, we saw Chronos fail to run jobs after a network partition. In this post, we’ll see MariaDB Galera Cluster allow transactions to read partially committed state.

Galera Cluster extends MySQL (and MySQL’s fork, MariaDB) to clusters of machines, all of which support reads and writes. It uses a group communication system to broadcast writesets and certify each for use. Unlike most Postgres replication systems, it handles the failure and recovery of all nodes automatically, and unlike MySQL Cluster, it has only one type of node (as opposed to three). The MariaDB Galera packages are particularly easy to install and configure.

Galera Cluster uses the normal InnoDB isolation levels locally–but we’re interested in cluster-wide consistency guarantees. Between nodes, Galera claims to implement Snapshot Isolation–a reasonably strong consistency model.

Jepsen: Chronos

Chronos is a distributed task scheduler (cf. cron) for the Mesos cluster management system. In this edition of Jepsen, we’ll see how simple network interruptions can permanently disrupt a Chronos+Mesos cluster.

Chronos relies on Mesos, which has two flavors of node: master nodes, and slave nodes. Ordinarily in Jepsen we’d refer to these as “primary” and “secondary” or “leader” and “follower” to avoid connotations of, well, slavery, but the master nodes themselves form a cluster with leaders and followers, and terms like “executor” have other meanings in Mesos, so I’m going to use the Mesos terms here.

Mesos slaves connect to masters and offer resources like CPU, disk, and memory. Masters take those offers and make decisions about resource allocation using frameworks like Chronos. Those decisions are sent to slaves, which actually run tasks on their respective nodes. Masters form a replicated state machine with a persistent log. Both masters and slaves rely on Zookeeper for coordination and discovery. Zookeeper is also a replicated persistent log.

Jepsen: Aerospike

Previously, on Jepsen, we reviewed Elasticsearch’s progress in addressing data-loss bugs during network partitions. Today, we’ll see Aerospike 3.5.4, an “ACID database”, react violently to a basic partition.

[Update, 2018-03-07] See the followup analysis of 3.99.0.3

Aerospike is a high-performance, distributed, schema-less, KV store, often deployed in caching, analytics, or ad tech environments. Its five-dimensional data model is similar to Bigtable or Cassandra: namespaces (databases) contain sets (tables) of records, where keys identify records. Each record is a map of bin names to values. Aerospike has put a good deal of work into performance across good-size (~100TB) datasets, and is repositioning itself as a general purpose datastore competitive with, say, MongoDB.

Jepsen: Elasticsearch 1.5.0

Previously, on Jepsen, we demonstrated stale and dirty reads in MongoDB. In this post, we return to Elasticsearch, which loses data when the network fails, nodes pause, or processes crash.

Nine months ago, in June 2014, we saw Elasticsearch lose both updates and inserted documents during transitive, nontransitive, and even single-node network partitions. Since then, folks continue to refer to the post, often asking whether the problems it discussed are still issues in Elasticsearch. The response from Elastic employees is often something like this:

Jepsen: MongoDB stale reads

Please note: our followup analysis of 3.4.0-rc3 revealed additional faults in MongoDB’s replication algorithms which could lead to the loss of acknowledged documents–even with Majority Write Concern, journaling, and fsynced writes.

In May of 2013, we showed that MongoDB 2.4.3 would lose acknowledged writes at all consistency levels. Every write concern less than MAJORITY loses data by design due to rollbacks–but even WriteConcern.MAJORITY lost acknowledged writes, because when the server encountered a network error, it returned a successful, not a failed, response to the client. Happily, that bug was fixed a few releases later.

Since then I’ve improved Jepsen significantly and written a more powerful analyzer for checking whether or not a system is linearizable. I’d like to return to Mongo, now at version 2.6.7, to verify its single-document consistency. (Mongo 3.0 was released during my testing, and I expect they’ll be hammering out single-node data loss bugs for a little while.)

Jepsen: Elasticsearch

This post covers Elasticsearch 1.1.0. In the months since its publication, Elasticsearch has added a comprehensive overview of correctness issues and their progress towards fixing some of these bugs.

Jepsen: etcd and Consul

In the previous post, we discovered the potential for data loss in RabbitMQ clusters. In this oft-requested installment of the Jepsen series, we’ll look at etcd: a new contender in the CP coordination service arena. We’ll also discuss Consul’s findings with Jepsen.

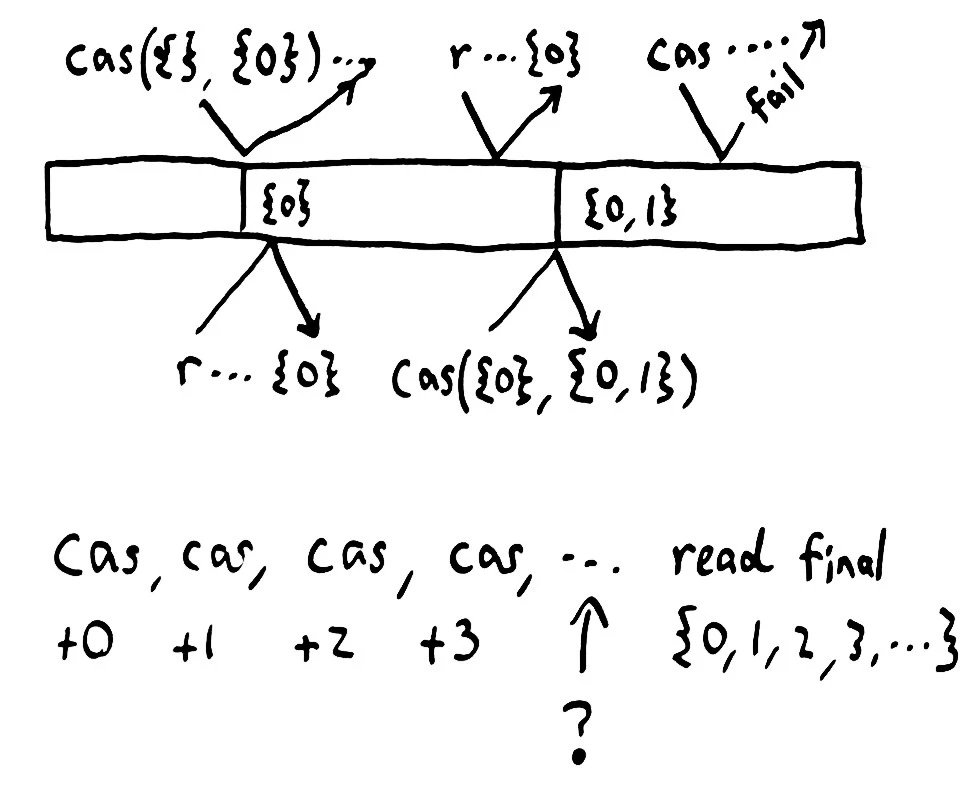

Like Zookeeper, etcd is designed to store small amounts of strongly-consistent state for coordination between services. It exposes a tree of logical nodes, each identified by a string key, containing a string value, and with a version number termed an index–plus, potentially, a set of child nodes. Everything’s exposed as JSON over an HTTP API.

Etcd is often used for service discovery, distributed locking, atomic broadcast, sequence numbers, and pointers to data in eventually consistent stores. Because etcd offers atomic compare-and-set by both value and version index, it’s a powerful primitive in building other distributed systems.
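As a rough illustration of why compare-and-set by version index is useful, here is a single-process Python model of one key. It is not etcd's HTTP API: the `VersionedKey` class and its method names are hypothetical, and the model assumes the index simply increases by one on every successful write.

```python
class VersionedKey:
    """One key with a value and a version index that grows on every write."""

    def __init__(self, value):
        self.value = value
        self.index = 1

    def _write(self, new_value):
        self.value = new_value
        self.index += 1
        return True

    def cas_by_value(self, expected_value, new_value):
        """Write only if the current value is the one the caller expects."""
        if self.value != expected_value:
            return False
        return self._write(new_value)

    def cas_by_index(self, expected_index, new_value):
        """Write only if nobody has written since the caller read the index."""
        if self.index != expected_index:
            return False
        return self._write(new_value)


# Two clients read the key at the same index, then race to update it.
key = VersionedKey("unlocked")
seen_index = key.index

first_wins = key.cas_by_index(seen_index, "held-by-client-1")
second_wins = key.cas_by_index(seen_index, "held-by-client-2")
```

Only one of the two racing writes can succeed, because the first one advances the index that the second one is still expecting. That single guarantee is what primitives like locks and sequence numbers are built on.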

Jepsen: RabbitMQ

RabbitMQ is a distributed message queue, and is probably the most popular open-source implementation of the AMQP messaging protocol. It supports a wealth of durability, routing, and fanout strategies, and combines excellent documentation with well-designed protocol extensions. I’d like to set all these wonderful properties aside for a few minutes, however, to talk about using your queue as a lock service. After that, we’ll explore RabbitMQ’s use as a distributed fault-tolerant queue.

Computational techniques in Knossos

Earlier versions of Jepsen found glaring inconsistencies, but missed subtle ones. In particular, Jepsen was not well equipped to distinguish linearizable systems from sequentially or causally consistent ones. When people asked me to analyze systems which claimed to be linearizable, Jepsen could rule out obvious classes of behavior, like dropping writes, but couldn’t tell us much more than that. Since users and vendors are starting to rely on Jepsen as a basic check on correctness, it’s important that Jepsen be able to identify true linearization errors.

Strong consistency models

Update, 2018-08-24: For a more complete, formal discussion of consistency models, see jepsen.io.

Network partitions are going to happen. Switches, NICs, host hardware, operating systems, disks, virtualization layers, and language runtimes, not to mention program semantics themselves, all conspire to delay, drop, duplicate, or reorder our messages. In an uncertain world, we want our software to maintain some sense of intuitive correctness.

Well, obviously we want intuitive correctness. Do The Right Thing(TM)! But what exactly is the right thing? How might we describe it? In this essay, we’ll take a tour of some “strong” consistency models, and see how they fit together.

Jepsen: Redis redux

In a recent blog post, antirez detailed a new operation in Redis: WAIT. WAIT is proposed as an enhancement to Redis’ replication protocol to reduce the window of data loss in replicated Redis systems; clients can block awaiting acknowledgement of a write to a given number of nodes (or time out if the given threshold is not met). The theory here is that positive acknowledgement of a write to a majority of nodes guarantees that write will be visible in all future states of the system.

As I explained earlier, any asynchronously replicated system with primary-secondary failover allows data loss. Optional synchronous replication, antirez proposes, should make it possible for Redis to provide strong consistency for those operations.

WAIT means that if you run three nodes A, B, C where every node contains a Sentinel instance and a Redis instance, and you “WAIT 1” after every operation to reach the majority of slaves, you get a consistent system.

WAIT can be also used, by improving the failover procedure, in order to have a strong consistent system (no writes to the older master from the point the failure detection is positive, to the end of the failover when the configuration is updated, or alternative, disconnect the majority of slaves you can reach during the failure detection so that every write will fail during this time).

Jepsen: Strangeloop Hangout

Since the Strangeloop talks won’t be available for a few months, I recorded a new version of the talk as a Google Hangout.

Jepsen: Cassandra

Previously on Jepsen, we learned about Kafka’s proposed replication design.

Cassandra is a Dynamo system; like Riak, it divides a hash ring into several chunks, and keeps N replicas of each chunk on different nodes. It uses tunable quorums, hinted handoff, and active anti-entropy to keep replicas up to date. Unlike the Dynamo paper and some of its peers, Cassandra eschews vector clocks in favor of a pure last-write-wins approach.
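A last-write-wins merge fits in a few lines, which makes its main hazard easy to see. The Python sketch below is a simplified model rather than Cassandra's implementation: the `lww_merge` name is hypothetical, each replica's state for a cell is modeled as a `(timestamp, value)` tuple, and breaking timestamp ties by comparing values is an assumption made here so that the merge is deterministic.

```python
def lww_merge(a, b):
    """Merge two replica states for one cell: the higher timestamp wins.

    Each state is a (timestamp, value) tuple. Tuples compare element by
    element, so equal timestamps fall back to comparing the values.
    """
    return max(a, b)


# Two clients each read an empty collection and concurrently write back
# "what I read plus my element", with timestamps from their own clocks.
replica_1 = (100, ("a",))  # client 1 added "a"
replica_2 = (101, ("b",))  # client 2 added "b"

merged = lww_merge(replica_1, replica_2)
merged_other_order = lww_merge(replica_2, replica_1)
```

Both replicas converge on the same state regardless of merge order, but the merged value contains only "b": the write carrying "a" was acknowledged and then silently discarded, with no conflict surfaced to either client.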

Some Write Loses

Jepsen: Kafka

In the last Jepsen post, we learned about NuoDB. Now it’s time to switch gears and discuss Kafka. Up next: Cassandra.

Kafka is a messaging system which provides an immutable, linearizable, sharded log of messages. Throughput and storage capacity scale linearly with nodes, and thanks to some impressive engineering tricks, Kafka can push astonishingly high volume through each node, often saturating disk, network, or both. Consumers use Zookeeper to coordinate their reads over the message log, providing efficient at-least-once delivery–and some other nice properties, like replayability.

Jepsen: NuoDB

Previously on Jepsen, we explored Zookeeper. Next up: Kafka.

NuoDB came to my attention through an amazing mailing list thread by the famous database engineer Jim Starkey, in which he argues that he has disproved the CAP theorem:

The CAP conjecture, I am convinced, is false and can be proven false.

The CAP conjecture has been a theoretical millstone around the neck of all ACID systems. Good riddance.

This is the first wooden stake for the heart of the noSQL movement. There are more coming.

Jepsen: Zookeeper

In this Jepsen post, we’ll explore Zookeeper. Up next: NuoDB.

Update 2019-07-23: @insumity explains that ZooKeeper sync+read is not, in fact, linearizable–there are conditions under which it might return stale reads.

Zookeeper, or ZK for short, is a distributed CP datastore based on a consensus protocol called ZAB. ZAB is similar to Paxos in that it offers linearizable writes and is available whenever a majority quorum can complete a round, but unlike the Paxos papers, places a stronger emphasis on the role of a single leader in ensuring the consistency of commits.

Automating Jepsen

If you, as a database vendor, implement a few features in your API, I can probably offer repeatable automated tests of your DB’s partition tolerance through Jepsen.

The outcome of these tests would be a set of normalized metrics for each DB like “supports linearizability”, “available for writes when a majority partition exists”, “available for writes when no majority available”, “fraction of writes successful”, “fraction of writes denied”, “fraction of writes acked then lost”, “95th latency during condition X”, and so forth. I’m thinking this would be a single-page web site–a spreadsheet, really–making it easy to compare and contrast DBs and find one that fits your safety needs.

At a minimum, I need to know:

The network is reliable

I’ve been discussing Jepsen and partition tolerance with Peter Bailis over the past few weeks, and I’m honored to present this post as a collaboration between the two of us. We’d also like to extend our sincere appreciation to everyone who contributed their research and experience to this piece.

Network partitions are a contentious subject. Some claim that modern networks are reliable and that we are too concerned with designing for theoretical failure modes. They often accept that single-node failures are common but argue that we can reliably detect and handle them. Conversely, others subscribe to Peter Deutsch’s Fallacies of Distributed Computing and disagree. They attest that partitions do occur in their systems, and that, as James Hamilton of Amazon Web Services neatly summarizes, “network partitions should be rare but net gear continues to cause more issues than it should.” The answer to this debate radically affects the design of distributed databases, queues, and applications. So who’s right?

Asynchronous replication with failover

In response to my earlier post on Redis inconsistency, Antirez was kind enough to help clarify some points about Redis Sentinel’s design.

Jepsen: final thoughts

Previously in Jepsen, we discussed Riak. Now we’ll review and integrate our findings.

This was a capstone post for the first four Jepsen posts; it is not the last post in the series. I’ve continued this work in the years since and produced several more posts.

We started this series with an open problem.

Jepsen: MongoDB

Previously in Jepsen, we discussed Redis. In this post, we’ll see MongoDB drop a phenomenal amount of data. See also: followup analyses of 2.6.7 and 3.4.0-rc3.

MongoDB is a document-oriented database with a similar distribution design to Redis. In a replica set, there exists a single writable primary node which accepts writes, and asynchronously replicates those writes as an oplog to N secondaries. However, there are a few key differences.

First, Mongo builds in its leader election and replicated state machine. There’s no separate system which tries to observe a replica set in order to make decisions about what it should do. The replica set decides among itself which node should be primary, when to step down, how to replicate, etc. This is operationally simpler and eliminates whole classes of topology problems.

Jepsen: On the perils of network partitions

This article is part of Jepsen, a series on network partitions. We’re going to learn about distributed consensus, discuss the CAP theorem’s implications, and demonstrate how different databases behave under partition.

Jepsen: Redis

Previously on Jepsen, we explored two-phase commit in Postgres. In this post, we demonstrate Redis losing 56% of writes during a partition.

Redis is a fantastic data structure server, typically deployed as a shared heap. It provides fast access to strings, lists, sets, maps, and other structures with a simple text protocol. Since it runs on a single server, and that server is single-threaded, it offers linearizable consistency by default: all operations happen in a single, well-defined order. There’s also support for basic transactions, which are atomic and isolated from one another.

Because of this easy-to-understand consistency model, many users treat Redis as a message queue, lock service, session store, or even their primary database. Redis running on a single server is a CP system, so it is consistent for these purposes.

Jepsen: Postgres

Previously on Jepsen, we introduced the problem of network partitions. Here, we demonstrate that a few transactions which “fail” during the start of a partition may have actually succeeded.

PostgreSQL is a terrific open-source relational database. It offers a variety of consistency guarantees, from read uncommitted to serializable. Because Postgres only accepts writes on a single primary node, we think of it as a CP system in the sense of the CAP theorem. If a partition occurs and you can’t talk to the server, the system is unavailable. Because transactions are ACID, we’re always consistent.

Right?